It’s the moment you’ve been eagerly anticipating: Google I/O keynote day! Google begins its annual developer conference with a flurry of announcements, unveiling many of its latest projects and innovations. Brian has already set the stage by outlining our expectations.

If you didn’t have the chance to watch the entire two-hour presentation on Tuesday, don’t worry. We’ve got you covered with a quick summary of the major announcements from the keynote, presented in an easy-to-read, easy-to-skim list.

Privacy concerns arise regarding AI voice call scans

Google demonstrated a call scam detection feature at I/O and said it will arrive in a future version of Android. The feature uses AI to scan voice calls in real time, an approach known as client-side scanning. Client-side scanning has drawn backlash before, including on iOS, where the outcry led Apple to shelve its own plans for it in 2021. As expected, several privacy advocates and experts have raised concerns about Google's use of the technology, warning that it could be extended beyond scam detection to more nefarious purposes.

Updated security features

Google unveiled new security and privacy enhancements for Android on Wednesday. These include on-device live threat detection to identify malicious apps, improved safeguards for screen sharing, and enhanced security against cell site simulators.

Google is expanding the on-device capabilities of its Google Play Protect system to identify fraudulent apps that try to access sensitive permissions. It also uses AI to detect when apps try to interact with other services and apps without authorization.

If the system detects clear signs of malicious behavior, it automatically disables the app. Otherwise, it alerts Google for further review and then notifies users.

Google TV

Google has integrated its Gemini AI into its Google TV smart TV operating system to generate descriptions for movies and TV shows. When a description is absent on the home screen, the AI will automatically provide one, ensuring viewers always have context about a title. Additionally, it can translate descriptions into the viewer’s native language, enhancing content discoverability. What’s more, the AI-generated descriptions are personalized based on a viewer’s genre and actor preferences.

Private Space feature

Private Space is a new Android feature that allows users to create a segregated section of the operating system for sensitive information. It functions similarly to Incognito mode for mobile, isolating specified apps into a “container.”

Private Space is accessible from the launcher and can be locked behind an additional layer of authentication. Apps inside it are hidden from notifications, settings, and recent apps. Users can still access these apps through the system sharesheet and photo picker in the main space, but only while the Private Space is unlocked.

While developers can currently experiment with Private Space, there is a bug that Google intends to address in the near future.

Google Maps gets geospatial AR

Google Maps users will soon gain access to a new layer of content on their smartphones: geospatial augmented reality (AR) content. The feature will debut later this year as a pilot program in Singapore and Paris.

To view the AR content, users first search for a location in Google Maps. If the location has AR content available and the user is nearby, they can tap the image labeled “AR Experience” and then hold up their phone.

Users exploring a place remotely can view the same AR experience in Street View. Afterward, they can share the experience on social media through a deep link URL or QR code.

Wear OS 5

Google offered developers a preview of its upcoming smartwatch operating system, Wear OS 5. This new version emphasizes enhancements in battery life and overall performance, including more efficient workout tracking. Developers can also expect updated tools for crafting watch faces, alongside new iterations of Wear OS tiles and Jetpack Compose for constructing watch apps.

“Web” search filter

Google has introduced a new feature that allows users to filter for text-based links exclusively. The new “Web” filter appears at the top of the results page, enabling users to refine their search results to show only text links, similar to how they can currently filter for images, videos, news, or shopping results.

The filter acknowledges that users sometimes prefer traditional text-based links to web pages, known as classic blue links. Those links often take a back seat as Google increasingly answers queries directly through its Knowledge Panels or AI-driven experiments.

Firebase Genkit

Firebase has introduced a new addition to its platform called Firebase Genkit. This tool is designed to simplify the development of AI-powered applications in JavaScript/TypeScript, with Go support expected soon. Genkit is an open-source framework licensed under Apache 2.0, allowing developers to easily integrate AI capabilities into both new and existing applications.

The company is highlighting several use cases for Genkit, including content generation and summarization, text translation, and image generation, which are common applications of AI.

Generative AI for learning

Today, Google unveiled LearnLM, a new series of generative AI models developed in collaboration between Google’s DeepMind AI research division and Google Research. These models, fine-tuned for learning, are designed to provide conversational tutoring to students across various subjects, according to Google.

LearnLM is already available on several of Google's platforms, and the company is now launching a pilot program in Google Classroom. Google is also working with educators to explore how LearnLM could simplify and improve lesson planning, for example by helping teachers discover new ideas, content, and activities, or find materials tailored to their students' specific needs.

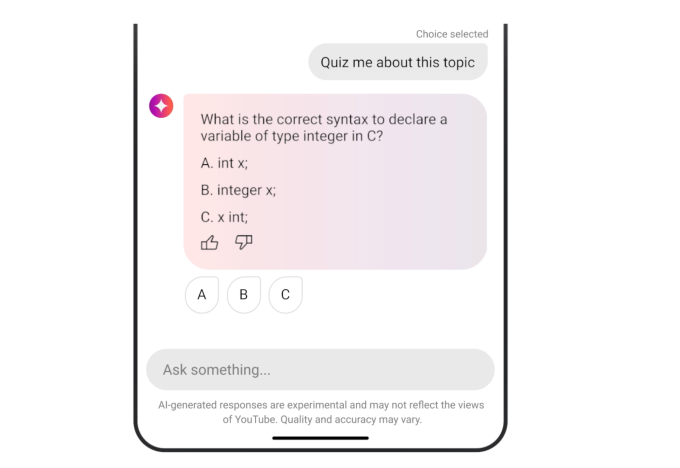

Quiz master

YouTube has introduced AI-generated quizzes as a new feature. This conversational AI tool enables users to interact with educational videos by asking clarifying questions, receiving explanations, or taking quizzes on the subject matter.

This new tool will be particularly beneficial for those watching longer educational videos, such as lectures or seminars, as it leverages the Gemini model’s long-context capabilities. These features are currently rolling out to select Android users in the U.S.

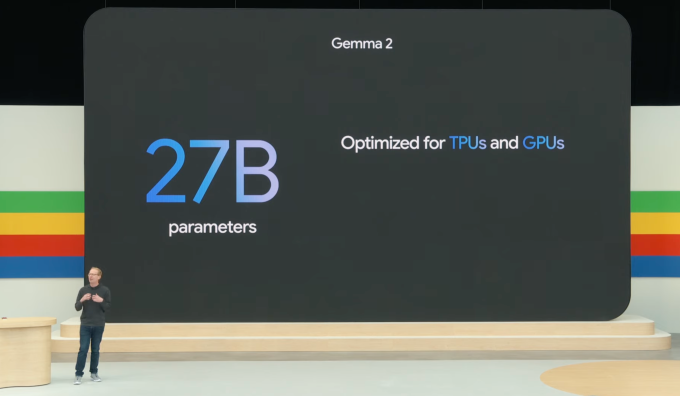

Gemma 2 updates

In response to developer feedback, Google is adding a new 27-billion-parameter model to Gemma 2, the next generation of its Gemma models, which is set to launch in June. According to Google, this model size was optimized by Nvidia to run efficiently on next-generation GPUs, and it can also run on a single TPU host and on Vertex AI.

Google Play

Google Play is receiving several updates aimed at improving app discovery, user acquisition, and developer tools. These updates include a new discovery feature for apps, additional ways to acquire users, enhancements to Play Points, and improvements to developer-facing tools such as the Google Play SDK Console and Play Integrity API.

One highlight for developers is the Engage SDK, which will let app creators present their content to users in a personalized, full-screen, immersive experience. Users won't see this feature yet, however.

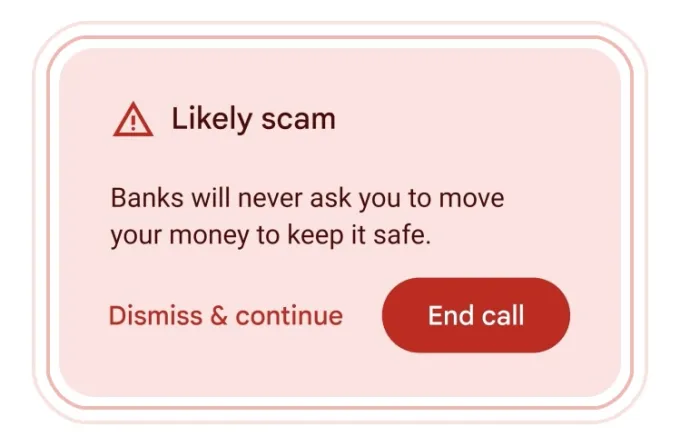

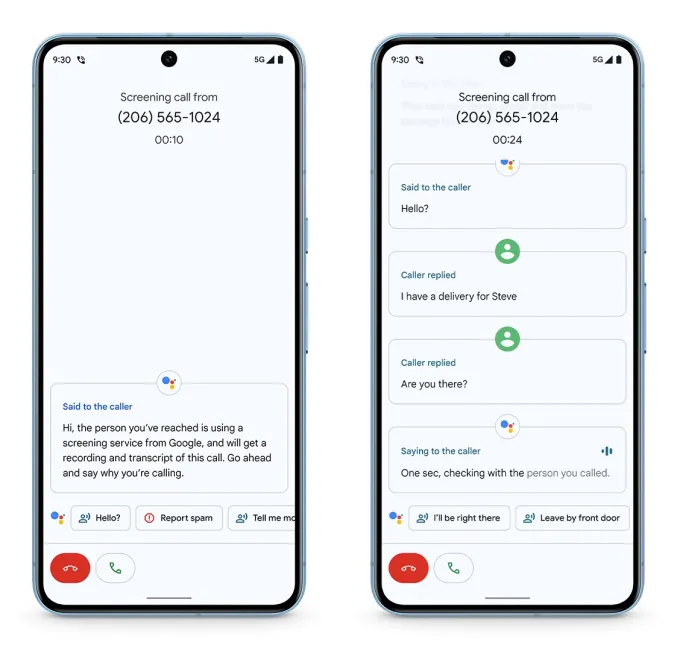

Detecting scams during calls

On Tuesday, Google provided a preview of a new feature designed to alert users to potential scams during phone calls. This feature, to be integrated into a future version of Android, leverages Gemini Nano, the smallest version of Google’s generative AI, which can operate entirely on-device. The system listens for “conversation patterns commonly associated with scams” in real time.

For instance, the system will be triggered if someone impersonates a “bank representative” or uses common scam tactics such as requesting passwords or gift cards. These tactics are widely recognized as attempts to extract money, yet many people worldwide still fall victim to them. When triggered, the system displays a notification warning the user that they may be the target of a scam.

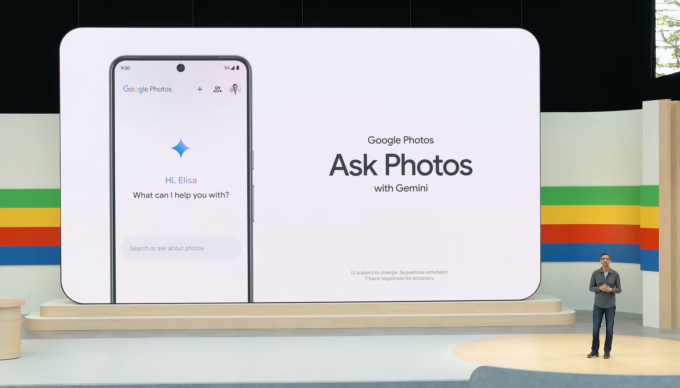

Ask Photos

Google Photos is receiving an AI-powered enhancement with the introduction of an experimental feature called Ask Photos, powered by Google's Gemini AI model. The feature, set to roll out later this summer, lets users search their Google Photos collection with natural language queries that draw on AI's understanding of their photos' content and other metadata.

While users previously could search for specific people, places, or things in their photos, the AI upgrade will make finding the right content more intuitive and less manual.

As an example, users could search for “Golden Stripes,” the band name given to a charming duo made up of a tiger stuffed animal and a Golden Retriever.

All About Gemini

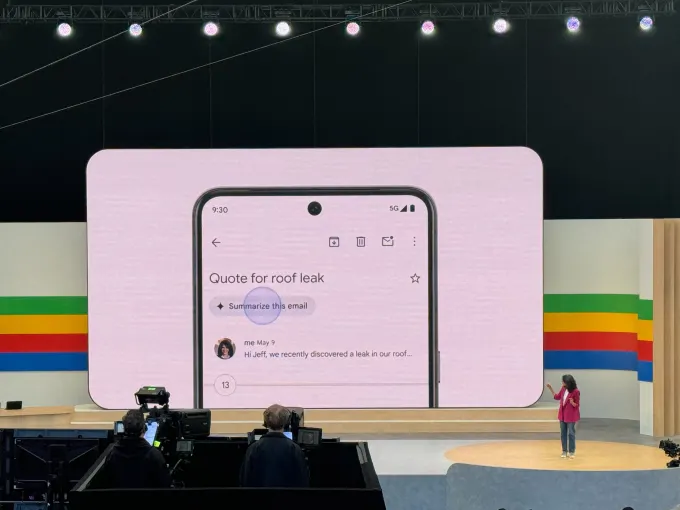

Gemini in Gmail

Google is bringing Gemini to Gmail so users can search, summarize, and draft emails more efficiently. Gemini will also help with more complex tasks, such as processing e-commerce returns: for example, it can find the receipt in your inbox and fill out an online form on your behalf.

Gemini 1.5 Pro

Gemini can now analyze longer documents, codebases, videos, and audio recordings than before.

In a private preview, the upcoming version of Gemini 1.5 Pro, the company's current flagship model, can take in up to 2 million tokens, double its previous maximum. According to Google, that gives the new version the largest input support of any commercially available model.

Gemini Live

The company introduced a new feature in Gemini called Gemini Live, which lets users hold “in-depth” voice conversations with Gemini on their smartphones. Users can interrupt Gemini while it's speaking to ask clarifying questions, and it will adapt to their speech patterns in real time. Gemini can also see and respond to users' surroundings through photos or videos captured by their smartphones' cameras.

While Live may not appear to be a significant advancement over existing technology at first glance, Google asserts that it leverages newer techniques from the generative AI field to offer superior and less error-prone image analysis. These techniques are combined with an enhanced speech engine to provide more consistent, emotionally expressive, and realistic multi-turn dialogues.

Gemini Nano

Google is building Gemini Nano, its smallest AI model, directly into the Chrome desktop client, starting with Chrome 126. This will let developers use the on-device model to power their own AI features. Google plans to use the new capability to improve features such as the “help me write” tool from Workspace Lab in Gmail.

Gemini on Android

Google’s Gemini on Android, its AI replacement for Google Assistant, will soon leverage its deep integration with Android’s mobile operating system and Google’s apps. This integration will allow users to directly drag and drop AI-generated images into applications like Gmail, Google Messages, and others. Additionally, YouTube users will have the ability to tap “Ask this video” to extract specific information from within a YouTube video, according to Google.

Gemini on Google Maps

The capabilities of the Gemini model are being integrated into the Google Maps platform for developers, beginning with the Places API. This integration enables developers to display generative AI summaries of places and areas within their own applications and websites. These summaries are generated based on Gemini’s analysis of insights gathered from Google Maps’ community, which comprises over 300 million contributors. One notable improvement is that developers will no longer need to create their own custom descriptions of places.

Tensor Processing Units receive a performance upgrade

Google has introduced Trillium, the sixth generation of its Tensor Processing Unit (TPU) AI chips, which will be released later this year. The announcement continues Google's tradition of unveiling its next-generation TPUs at I/O, well before the chips are actually available.

The new TPUs will offer a 4.7x increase in compute performance per chip compared to the fifth generation. Additionally, Trillium includes the third generation of SparseCore, described by Google as “a specialized accelerator for processing ultra-large embeddings common in advanced ranking and recommendation workloads.”

AI in search

Google is enhancing its search capabilities with more AI features, aiming to address concerns about losing market share to competitors like ChatGPT and Perplexity. In the U.S., the company is introducing AI-powered overviews for users. Moreover, Google intends to utilize Gemini as an agent for tasks such as trip planning.

In a further development, Google plans to use generative AI to organize entire search results pages for certain queries. This is in addition to the existing AI Overview feature, which provides a concise snippet with aggregated information about a searched topic. The AI Overview feature will become generally available on Tuesday, following a period in Google’s AI Labs program.

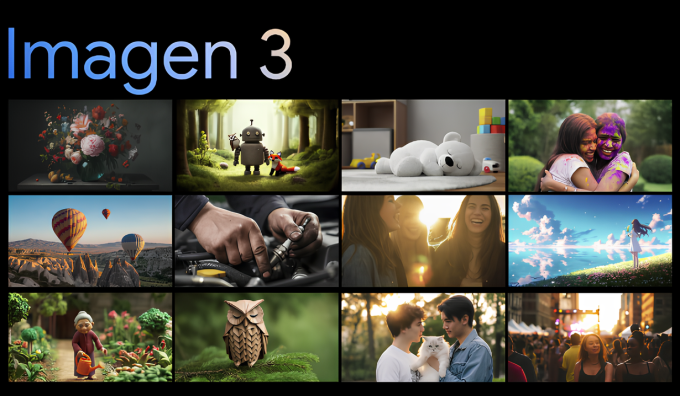

Generative AI upgrades

Google has unveiled Imagen 3, the newest model in its Imagen generative AI family. Demis Hassabis, CEO of DeepMind, Google's AI research division, said Imagen 3 understands the text prompts it turns into images more accurately than its predecessor, Imagen 2. He also noted that Imagen 3 is more “creative and detailed” in its generated images while producing fewer “distracting artifacts” and errors.

Hassabis further stated, “This is our best model yet for rendering text, which has been a challenge for image generation models.”

Project IDX

Project IDX, the company's next-gen, AI-centric, browser-based development environment, is now in open beta. The update integrates the Google Maps Platform into the IDE to help developers add geolocation features to their apps, and adds integrations with Chrome DevTools and Lighthouse to help debug applications. Soon, Google will also enable deploying apps to Cloud Run, Google Cloud's serverless platform for running front- and back-end services.

Veo

Google is stepping up its competition against OpenAI’s Sora with Veo, an AI model capable of generating 1080p video clips approximately one minute in length based on a given text prompt. Veo is designed to capture various visual and cinematic styles, such as landscapes and time lapses, and can also edit and adjust previously generated footage.

This development builds upon Google’s earlier commercial endeavors in video generation, which were previewed in April. These efforts utilized the Imagen 2 family of image-generating models to create looping video clips.

Circle to Search

The AI-powered Circle to Search feature for Android can now solve more complex physics and math word problems. It lets users get instant answers with gestures like circling, highlighting, scribbling, or tapping, making it more natural to use Google Search from anywhere on the phone.

Circle to Search is also being improved to better help kids with their homework directly from supported Android phones and tablets.

Pixel 8a

Google couldn’t wait until I/O to show off the latest addition to the Pixel line and announced the new Pixel 8a last week. The handset starts at $499 and ships Tuesday. The updates, too, are what we’ve come to expect from these refreshes. At the top of the list is the addition of the Tensor G3 chip.

Pixel Tablet

Google's Pixel Tablet is now available to buy without its base. Brian reviewed the Pixel Tablet around this time last year, and much of that review centered on the base, which makes its absence from this new option all the more interesting.